08/22/2024 by Michael Adams

Breaking Down Artificial Intelligence (AI)

Artificial Intelligence (AI). You see it mentioned all over the news headlines. You overhear your coworkers discussing it in the breakroom. Even your family members bring it up at get-togethers.

Much like when the internet first came to be, people are both amazed and uncertain about it. I often hear and see the same questions come up. What is the history of AI? When did it start? What exactly is AI? Is it just ChatGPT? What kinds of AI are there? Will AI take my job from me? Will AI take over the human race? (Definitely no to the last one!)

As someone who works in the technology field, I’d love to answer those exact questions for you and share some of my own thoughts on AI.

What is Artificial Intelligence?

Let’s answer this question first. Artificial intelligence is the science of making machines think, process, and create like humans. Today, the term is applied to software that can perform tasks a human could do, like analyzing data or replying to customers online.

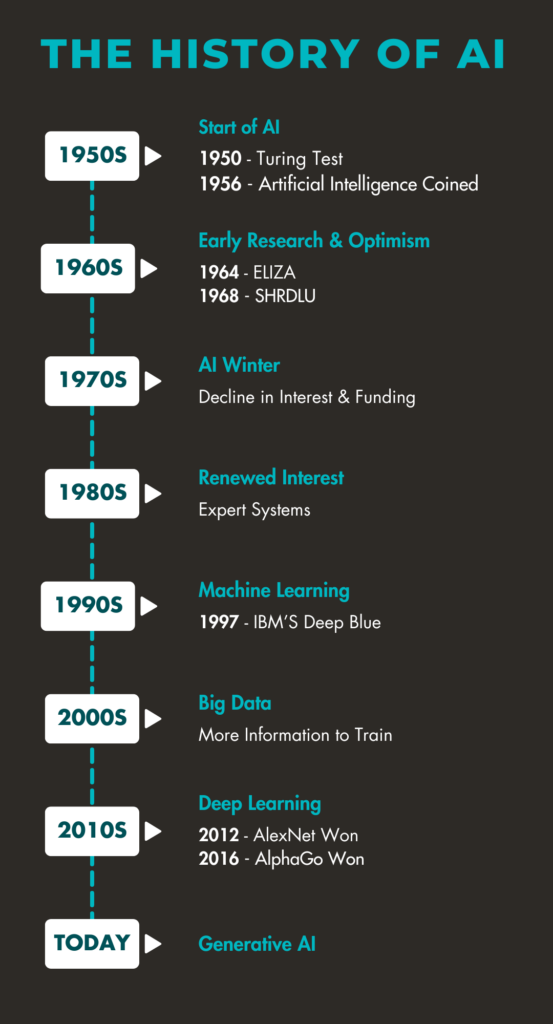

The History of Artificial Intelligence

You might be surprised to learn that AI has been around for more than 70 years.

1950s

The Start of AI

One of the earliest milestones in AI was the Turing Test. In 1950, Alan Turing proposed it as a way to judge whether a machine could exhibit behavior indistinguishable from a human’s. The test was widely influential and is often seen as the start of AI.

The term “Artificial Intelligence” was coined by John McCarthy, Marvin Minsky, Nathaniel Rochester, and Claude Shannon in their proposal for the 1956 Dartmouth Conference. The conference is seen as the founding event of AI.

1960s

Early Research and Optimism

Early AI programs began to develop during this time. Computer scientists and researchers eagerly explored methods to create intelligent machines and programs.

Joseph Weizenbaum created ELIZA, a natural language processing program to explore communication between people and machines. Later, Terry Winograd created SHRDLU, a program that understood language in a restricted environment.

1970s

The AI Winter

Early enthusiasm from the 1950s and 1960s faded due to limited computational power and unrealistic expectations. Interest and funding for AI declined significantly, and many projects fell by the wayside. You’ll often see this time in history called the “AI Winter.”

1980s

Expert Systems Bring Renewed Interest

Despite the slowdown, some research continued. Expert systems, designed to mimic the decision-making abilities of human experts, marked a turning point in AI. These systems showed that AI could be practical and beneficial in business and industry, and many commercial fields, such as medicine and finance, began using them.

1990s

Machine Learning and Real-World Applications

Here’s where AI started gaining momentum. During this time, we shifted from rule-based systems to Machine Learning. Machine Learning is just that – a machine or program that can learn from data. We see a lot of Machine Learning in today’s applications, like self-driving cars or facial recognition.

In 1997, IBM’s Deep Blue became the first computer system to defeat a reigning world chess champion, Garry Kasparov, in a match under standard tournament conditions. Deep Blue relied mostly on massive search power and hand-tuned evaluation rules rather than Machine Learning, but the moment showcased AI’s potential for complex problem-solving.

2000s

Big Data Offers AI Advancements

Until then, Machine Learning was limited by the amount and quality of data available for training and testing. In the 2000s, big data came into play, giving AI access to massive amounts of data from various sources. Machine Learning had more information to train on, increasing its capability to learn complex patterns and make accurate predictions.

Additionally, advances in data storage and processing technologies led to the development of more sophisticated Machine Learning algorithms, like Deep Learning.

2010s

Breakthroughs With Deep Learning

Deep Learning drove the modern wave of AI. It enabled machines to learn from large datasets and make predictions or decisions based on them. It has led to significant breakthroughs in many fields and can perform tasks such as classifying images.

In 2012, AlexNet won, or rather dominated, the ImageNet Large Scale Visual Recognition Challenge. This event is widely credited with bringing Deep Learning into the mainstream.

In 2016, Google DeepMind’s AlphaGo played a five-game match of Go against Lee Se-dol, one of the world’s top players, and won four games to one. Shocked, Lee said AlphaGo played a “nearly perfect game.” DeepMind said AlphaGo first studied records of past human games, then played against itself over and over, learning from its mistakes and getting better each time. It was a notable moment in history, demonstrating the power of reinforcement learning with AI.

2020s

Generative AI

Today’s largest and best-known impact is Generative AI, which can create new content based on the data it was trained on. Generative AI has been widely adopted in writing, music, photography, and even video. We’re also beginning to see AI across industries, from healthcare and finance to autonomous vehicles.

Common Forms of AI

Computer Vision

Computer Vision is a field of AI that enables machines to interpret and make decisions based on visual data. It involves acquiring, processing, analyzing, and understanding images and video to produce numerical or symbolic information. Common applications include facial recognition, object detection, medical image analysis, and autonomous vehicles.

Honestly, Computer Vision is my favorite field of AI. I’ve had the opportunity to work on some extremely interesting Computer Vision use cases. One used augmented reality lenses (similar to virtual reality goggles) together with Computer Vision to train combat medics. Combining Computer Vision with augmented reality added a level of realism to the training that used to be unattainable. While I would love to go into further detail about what the AI looked like, I signed a non-disclosure agreement. You’ll just have to take my word that it was really cool!
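To make “processing images to produce numerical information” a bit more concrete, here is a minimal sketch in Python of one building block many Computer Vision systems rely on: sliding a small filter (a kernel) across an image to detect edges. The tiny image and the Sobel-style kernel are made-up illustrations, not anything from the projects mentioned above; real systems work on far larger images and, in Deep Learning, usually learn their filters from data.

```python
# A tiny made-up grayscale "image": 0 = dark pixel, 9 = bright pixel.
# The left half is dark and the right half is bright, so there is one vertical edge.
IMAGE = [
    [0, 0, 0, 9, 9, 9],
    [0, 0, 0, 9, 9, 9],
    [0, 0, 0, 9, 9, 9],
    [0, 0, 0, 9, 9, 9],
]

# A Sobel-style kernel that responds to left-to-right changes in brightness.
KERNEL = [
    [-1, 0, 1],
    [-2, 0, 2],
    [-1, 0, 1],
]


def convolve2d(image, kernel):
    """Slide the kernel over the image without padding and return the response map.

    Like most deep learning libraries, this does not flip the kernel,
    so strictly speaking it computes a cross-correlation.
    """
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    result = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            # Multiply the kernel with the image patch under it and add everything up.
            total = sum(
                image[i + di][j + dj] * kernel[di][dj]
                for di in range(kh)
                for dj in range(kw)
            )
            row.append(total)
        result.append(row)
    return result


for row in convolve2d(IMAGE, KERNEL):
    print(row)
# prints:
# [0, 36, 36, 0]
# [0, 36, 36, 0]
```

The large values in the middle columns mark where dark pixels meet bright ones. Flat regions score zero, which is the kind of numerical signal later stages of a vision system can build on.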

Machine Learning (ML)

Machine Learning is a subset of AI that allows computers to learn from and make predictions or decisions based on data. It encompasses a variety of techniques such as supervised learning, unsupervised learning, reinforcement learning, and semi-supervised learning. Common applications include recommendation systems (like Netflix’s), fraud detection, predictive analytics, and personalization.
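Supervised learning is the easiest of those techniques to show in a few lines: the program is given example inputs with known answers and “learns” a rule it can apply to new inputs. This minimal sketch fits a straight line with ordinary least squares. The shipping distances and delivery times are hypothetical numbers made up for illustration (and perfectly linear so the result is easy to check); real models train on far more, and far messier, data.

```python
# Hypothetical training data: shipping distance (miles) and delivery time (hours).
DISTANCES = [100, 200, 300, 400]
HOURS = [3, 5, 7, 9]


def fit_line(xs, ys):
    """Learn slope and intercept for y = slope * x + intercept via ordinary least squares."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # How x and y vary together, divided by how much x varies on its own.
    covariance = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    variance = sum((x - mean_x) ** 2 for x in xs)
    slope = covariance / variance
    intercept = mean_y - slope * mean_x
    return slope, intercept


def predict(model, x):
    """Apply the learned line to a new, unseen input."""
    slope, intercept = model
    return slope * x + intercept


model = fit_line(DISTANCES, HOURS)
print(round(predict(model, 500), 2))  # prints 11.0
```

The “learning” here is just computing two numbers from the examples; more complex models learn millions or billions of numbers, but the idea of fitting parameters to labeled data is the same.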

Deep Learning

Deep Learning is a subset of machine learning. It involves neural networks with many layers (deep neural networks) that can learn from large amounts of data. It enables machines to learn features and representations from raw data automatically. Common architectures include convolutional neural networks (CNNs), recurrent neural networks (RNNs), and generative adversarial networks (GANs).
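The “layers” in a neural network are easier to picture with a tiny example. This sketch is a two-layer network, far shallower than real deep networks, that computes XOR (output 1 when exactly one input is 1). A single linear layer cannot represent XOR, but passing the input through a hidden layer with a ReLU activation and then an output layer can. The weights are set by hand purely for illustration; in real Deep Learning they are learned automatically from data, typically with backpropagation and gradient descent.

```python
def relu(values):
    """ReLU activation: keep positive values, replace negatives with zero."""
    return [max(0.0, v) for v in values]


def dense(inputs, weights, biases):
    """One fully connected layer: each row of `weights` holds one neuron's input weights."""
    return [
        sum(w * x for w, x in zip(row, inputs)) + b
        for row, b in zip(weights, biases)
    ]


# Hand-picked (not learned) weights, chosen so the network computes XOR.
HIDDEN_WEIGHTS = [[1.0, 1.0], [1.0, 1.0]]
HIDDEN_BIASES = [0.0, -1.0]
OUTPUT_WEIGHTS = [[1.0, -2.0]]
OUTPUT_BIASES = [0.0]


def forward(x):
    """Pass an input through the hidden layer, then the output layer."""
    hidden = relu(dense(x, HIDDEN_WEIGHTS, HIDDEN_BIASES))
    return dense(hidden, OUTPUT_WEIGHTS, OUTPUT_BIASES)[0]


for pair in ([0, 0], [0, 1], [1, 0], [1, 1]):
    print(pair, forward(pair))
# prints:
# [0, 0] 0.0
# [0, 1] 1.0
# [1, 0] 1.0
# [1, 1] 0.0
```

Each layer transforms the output of the one before it. Stacking many such layers is what lets deep networks learn complex features, like edges, then shapes, then whole objects in an image.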

Natural Language Processing (NLP)

Natural Language Processing (NLP) is a branch of AI that focuses on the interaction between computers and humans through natural language. It enables machines to understand, interpret, generate, and respond to human language. Common applications include machine translation, sentiment analysis, chatbots, and speech recognition.
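As a deliberately simple illustration of one of those applications, sentiment analysis, here is a word-counting sketch in Python. The word lists are tiny and hypothetical, and this rule-based approach is a stand-in for how modern NLP works: today’s systems learn word meanings and context from large amounts of text rather than relying on hand-written lists. It still shows the basic steps of turning raw text into tokens and then into a decision.

```python
import re

# Hypothetical, hand-written word lists (real systems learn this from data).
POSITIVE = {"great", "good", "love", "fast", "helpful"}
NEGATIVE = {"bad", "slow", "late", "hate", "broken"}


def tokenize(text):
    """Lowercase the text and split it into word tokens (letters and apostrophes)."""
    return re.findall(r"[a-z']+", text.lower())


def sentiment(text):
    """Score text as +1 per positive word and -1 per negative word."""
    tokens = tokenize(text)
    score = sum(t in POSITIVE for t in tokens) - sum(t in NEGATIVE for t in tokens)
    if score > 0:
        return "positive"
    if score < 0:
        return "negative"
    return "neutral"


print(sentiment("The driver was fast and helpful!"))  # prints positive
print(sentiment("My shipment was late again."))       # prints negative
print(sentiment("The package arrived."))              # prints neutral
```

A word list like this breaks easily (“not good” counts as positive), which is exactly why modern NLP relies on models that learn context instead.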

Generative AI / ChatGPT

Generative AI refers to AI models that can generate new data similar to the data they’re trained on. This includes text, images, music, and more. ChatGPT, a specific (and well-known) application of Generative AI, is a chatbot developed by OpenAI and built on its GPT large language models. It generates human-like text based on the input it receives, using Deep Learning techniques to produce coherent and contextually relevant responses. This makes it useful for applications like conversational agents, content creation, and more.
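ChatGPT’s underlying models are far too large to show here, but the core idea of generating new data that resembles the training data can be sketched with a much older and simpler technique: a bigram model. It records which word follows which in its training text, then samples new word sequences from those observations. The training sentence is made up, and this is a toy stand-in, not how GPT models work internally (they use deep neural networks that consider much longer context).

```python
import random
from collections import defaultdict

# Made-up training text for illustration.
CORPUS = "the truck left the warehouse and the truck reached the port"


def train_bigrams(text):
    """Map each word to the list of words that followed it in the training text."""
    words = text.split()
    model = defaultdict(list)
    for current, following in zip(words, words[1:]):
        model[current].append(following)
    return model


def generate(model, start, max_words=10, seed=None):
    """Build a new word sequence by repeatedly sampling a plausible next word."""
    rng = random.Random(seed)  # a seed makes the output repeatable
    word = start
    output = [word]
    for _ in range(max_words - 1):
        followers = model.get(word)  # .get avoids adding empty entries to the defaultdict
        if not followers:
            break  # this word never had a follower in the training text
        word = rng.choice(followers)
        output.append(word)
    return " ".join(output)


model = train_bigrams(CORPUS)
print(generate(model, "the", max_words=8, seed=1))
```

Every pair of adjacent words in the output appeared somewhere in the training text, yet the full sentence can be one that never appeared there, which is generative modeling in miniature.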

AI is Awesome BUT It Has Some Setbacks

Don’t Worry, AI is Not Going to Take Away Your Job

Artificial intelligence has some great benefits, like processing and analyzing data in minutes, but it’s not perfect. It’s still not HUMAN, it’s not you, and that is exactly why it won’t replace you in your job.

Take lawyers, for example. AI models have scored around the 90th percentile on a simulated bar exam. Awesome, right? But that doesn’t mean AI is going to perform better than an actual lawyer in a case. It just means the AI is good at answering test questions, thanks to the enormous amount of text it was trained on.

AI is very good and fast at many text-based tasks, but it’s terrible at motor functions and at mimicking a person in an unscripted environment. Take Generative AI: at some point, I’m sure you’ve seen or heard something created by it and thought, “This is definitely AI.”

Ethical Components

As AI continues to be adopted and widely used, I believe it needs legislation around it. For example, do people need to state they are using AI for their work? Can they still claim it’s something created by them if AI created a part or even all of it? Who gets the credit – the person or the machine?

There are also concerns about creators being miscredited and about copyright violations. There have been plenty of cases and news headlines in which AI has learned from artists and essentially recycled their work in a slightly new form. Is that a form of stealing?

AI Can Be Volatile

The development of AI is happening fast. Tomorrow, your current AI platform could be outdated. Things change from week to week. The features you love now could be cut and replaced with something new. It can be hard for people and their own Technology Teams to keep up! And did I mention hallucinations? Sometimes AI confidently makes up false information, so you should always double-check its results!

My Closing Thoughts

AI is real, it has been here for decades, and it is both impressive and scary. It’s also very trendy to mention in any news article or headline, which is why you’re seeing it so often. And, again, it is not coming for your job anytime soon. While some articles may have you believe it will bring us world peace by the end of the year, its capabilities are still limited.

This is not to understate the need for legislation to ensure AI is used responsibly; rather, this is a presentation of the facts about AI’s impressive feats and its numerous flaws. It’s important to remember that while things like ChatGPT and Generative AI are newer, AI development has a long history, going back nearly as far as computers themselves, and likely further back than many of our lifetimes.

About the Author

Michael Adams is a Data Scientist I at Trinity Logistics. Adams holds a Master’s Degree in Data Science and has three years of experience in the field, including two years at Trinity. In his current role, he focuses on applying Data Science techniques and methodologies to solve difficult problems, using AI to improve business outcomes, and supporting Trinity’s Data Engineering initiatives to improve quality assurance, ETL processes, and data cleanliness.

Outside of his role at Trinity, Adams has a couple of personal Computer Vision and AI projects of his own! When he’s not tinkering away at those, he enjoys being outdoors, either hiking or kayaking!